2015 Video Processing Guide

This article is out of date! While its contents remain relevant for educational and reference purposes, this pipeline has since been significantly refined. Be sure to read about the new one when you are done with this one.

In this article I am going to elaborate on two particular pipelines I perfected during my Second Generation LPs and used in every video thereafter. Throughout 2015, small bits and pieces were tweaked or researched with the goal of getting the absolute maximum benefit for my releases.

This article is written in layman's terms. I'm not a terrifically technical user. I leave the technicalities to my friends in the motherland.

I do not provide support or technical assistance for any subjects covered in my articles. Use and rely on their information at your own risk. Test your pipelines thoroughly before depending on them for mission critical content!

Introduction

The goal for producing public media is to provide an acceptable balance between quality and file size. File size is a concern because I live in Canada, on internet that most technologically established countries would barely rank as third world. Between bandwidth caps, slow speeds, and constant downtime, Canada's internet is the first major roadblock in providing content. After that, I have to worry about the file host itself. While I'd love to encode every video at 20 CRF and let my civilized friends download near-lossless files, the bandwidth cost would be immense even if a release only saw a few hits. For the sake of my American friends, who are also subject to barbaric internet practices, I seek a balance in my content.

Pursuit of Quality

Evidently, the more compressed a video is, the worse the quality will be. Compression rears its ugly head in two major areas: color and motion. YouTube videos, notorious for their mediocre encodes, show macroblocks because each frame is allowed to hold very little information, and when frames change, the codec tries to reuse information from neighboring frames to save space. That way it doesn't need to store an entirely new frame every time. But when the codec isn't configured to look ahead, or there simply isn't enough bitrate to spare, compression artifacts appear.

Similarly, images with high sharpness, high contrast, or grain contain more information, and it takes more data to retain it. This makes motion estimation and other codec features even more important, since preserving detail across a very busy series of frames is costly in both encode speed and bitrate. YouTube's settings are clearly set very low because they are more interested in quantity than quality, and they don't want to spend server CPU properly encoding a video when they can just puke out a blurry mess that casuals watching cat videos on their phones won't notice.

In late 2015, Nefarius told me that there is a color matrix option for ConvertToYV12, a command required by Megui. Before this, all Fraps encodes had slight color differences that hurt quality by crushing colors, especially greens, which in turn made compression more aggressive in those ranges. With the proper matrix setting, however, colors are 1:1 with fraps, and only codec compression remains.

Colors also get compressed. The more colors there are in an image, the more space it occupies, since it holds more information. But colors get hit pretty hard in many encodes for reasons beyond compression itself. There are also colorspaces and color matrices, which determine how the codec represents colors. Some tools, for example, default to settings intended for TVs, and this can dramatically affect an encode by crushing color ranges. Such was the case for many of my videos predating the Fourth Generation, because an Avisynth command had a hidden color matrix default (Rec.601) that we knew nothing about for years.
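A color matrix is just a set of coefficients for converting RGB to YUV and back. The Python sketch below is an illustration only: it assumes normalized 0..1 values and ignores TV-range scaling and chroma subsampling. It encodes pure green with the Rec.601 coefficients and decodes it with the Rec.709 ones, which is the kind of mismatch a hidden matrix default can cause.

```python
# Luma coefficients (Kr, Kb) for each matrix; Kg = 1 - Kr - Kb.
MATRICES = {
    "rec601": (0.299, 0.114),
    "rec709": (0.2126, 0.0722),
}


def rgb_to_ypbpr(rgb, matrix):
    """Convert normalized R'G'B' (0..1) to Y'PbPr using the given matrix."""
    kr, kb = MATRICES[matrix]
    kg = 1.0 - kr - kb
    r, g, b = rgb
    y = kr * r + kg * g + kb * b
    pb = (b - y) / (2.0 * (1.0 - kb))
    pr = (r - y) / (2.0 * (1.0 - kr))
    return y, pb, pr


def ypbpr_to_rgb(ypbpr, matrix):
    """Inverse conversion; results are clipped to the displayable 0..1 range."""
    kr, kb = MATRICES[matrix]
    kg = 1.0 - kr - kb
    y, pb, pr = ypbpr
    r = y + 2.0 * (1.0 - kr) * pr
    b = y + 2.0 * (1.0 - kb) * pb
    g = (y - kr * r - kb * b) / kg   # uses unclipped r and b
    return tuple(min(1.0, max(0.0, c)) for c in (r, g, b))


if __name__ == "__main__":
    green = (0.0, 1.0, 0.0)
    same = ypbpr_to_rgb(rgb_to_ypbpr(green, "rec601"), "rec601")
    mismatched = ypbpr_to_rgb(rgb_to_ypbpr(green, "rec601"), "rec709")
    print("matched matrices:   ", tuple(round(c, 3) for c in same))
    print("mismatched matrices:", tuple(round(c, 3) for c in mismatched))
```

With these coefficients the green channel comes back at roughly 85% of its original value, while red and blue go slightly negative and get clipped to zero.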

Color banding commonly occurs when colors get crushed. It is also seen in many games that compress their textures poorly or didn't support high color depth to begin with. Banding is more common in 8bit video, which has only 256 levels per channel and struggles with certain color ranges and transitions between colors, especially reds and darker shades. Near the end of the Fourth Generation, HKS ran a series of 10bit tests, and these were the findings that convinced us to switch to 10bit unanimously.

www.gameproc.com/meskstuff/wibodsbasement/8vs10.7z

Notable points of interest:

- Defiance: Back of the Hulker and the ordnance strapped to his side, the gate immediately to his left.

- PlanetSide 2 #1: The sky completely loses its banding, and several parts of the Sunderer pattern I use change. Pay careful attention to the cliffs.

- PlanetSide 2 #2: Everything about my sentient hood ornament. Especially his crotch.

- TERA: The strip of ground I'm standing on, especially near the red effect. The effects are in general more defined with less banding.

- Metro: Last Light #1: This guy's face, especially the bridge of his nose. Also note the guy's face below my hand. Again, less banding overall.

- Metro: Last Light #2: The tunnel walls in front of the train. The left wall, scope, her back left of the scope. Chair frame on the right.

- FFXIV: My ear actually exists. The snow in general gains detail. Rock faces are significantly changed.
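The reason 10bit helps with banding is simple arithmetic: more code values per channel means narrower bands across the same gradient. This Python sketch assumes a full-range gradient with no dithering (real encoders and players may dither, which hides some banding). It quantizes a dark gradient covering 1/16 of full scale across 1920 pixels and counts the distinct levels.

```python
def quantize(value, bits):
    """Quantize a 0..1 value to an integer code at the given bit depth."""
    levels = (1 << bits) - 1
    return round(value * levels)


def distinct_steps(start, end, bits, samples=1920):
    """Count distinct code values across a smooth gradient from start to end."""
    codes = {
        quantize(start + (end - start) * i / (samples - 1), bits)
        for i in range(samples)
    }
    return len(codes)


if __name__ == "__main__":
    for bits in (8, 10):
        steps = distinct_steps(0.0, 1 / 16, bits)
        print(f"{bits}bit: {steps} levels, each band ~{1920 / steps:.0f} pixels wide")
```

At 8bit the gradient gets 17 distinct levels; at 10bit it gets 65, so each band is roughly a quarter as wide.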

Thus, we can establish that when we encode a video we have a few simple goals to achieve.

- We must first find a balance between size and quality. The quality must not noticeably degrade in high motion, and must suit the media.

- We must ensure colors remain close to the source, and aren't noticeably crushed or compressed in the resulting file.

- We must ensure that motion blur and other post-processing effects don't negatively impact an otherwise stable system.

Concepts & Methods

When encoding a video, I am less worried about raw "quality" and more worried about how certain types of information affect quality. Motion is a big one, and as I said above, Motion Estimation matters a lot. Motion Estimation lets the encoder look across neighboring frames and work out where parts of the image moved, so frames can still share information without the high-motion frames being polluted as much by information from the frames around them. How it allocates information is not something I fully understand, but the concept is simple: the codec scans frames for changes and handles each piece of information according to how it changes.
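Motion search can be illustrated with the simplest possible method: exhaustive block matching. The Python sketch below takes a block from the current frame, tries every offset within a small search window in the reference frame, and keeps the one with the lowest sum of absolute differences (SAD). This is a simplified stand-in; x264's actual --me methods (dia, hex, umh, esa, tesa) and its subpixel refinement are far more elaborate, and the frame size, block size and search range here are arbitrary.

```python
def make_frame(w, h, sx, sy, size=4, value=200):
    """Black w x h frame (list of rows) with a bright square at (sx, sy)."""
    frame = [[0] * w for _ in range(h)]
    for y in range(sy, sy + size):
        for x in range(sx, sx + size):
            frame[y][x] = value
    return frame


def sad(cur, ref, cx, cy, rx, ry, block):
    """Sum of absolute differences between a block in cur and one in ref."""
    total = 0
    for dy in range(block):
        for dx in range(block):
            total += abs(cur[cy + dy][cx + dx] - ref[ry + dy][rx + dx])
    return total


def find_motion_vector(cur, ref, bx, by, block=4, search=4):
    """Exhaustively search ref for the block at (bx, by) in cur.
    Returns ((dx, dy), cost) for the lowest-SAD candidate."""
    h, w = len(ref), len(ref[0])
    best = ((0, 0), sad(cur, ref, bx, by, bx, by, block))
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rx, ry = bx + dx, by + dy
            if 0 <= rx <= w - block and 0 <= ry <= h - block:
                cost = sad(cur, ref, bx, by, rx, ry, block)
                if cost < best[1]:
                    best = ((dx, dy), cost)
    return best


if __name__ == "__main__":
    ref = make_frame(16, 16, 4, 4)   # square at (4, 4) in the previous frame
    cur = make_frame(16, 16, 6, 5)   # it moved 2 right and 1 down
    print(find_motion_vector(cur, ref, 6, 5))
```

Here the best match for the moved square sits at offset (-2, -1) in the reference frame with a cost of 0, so an encoder could store that vector instead of the pixels.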

Motion Estimation is something the end user only really "sees" when it's done badly, like on YouTube, where sudden or extensive motion blurs out or macroblocks the image. When it's done right, those sudden changes won't noticeably degrade image quality, and the file size stays fairly tight despite the perceivably greater amount of information. It's more about how the codec handles that information. The cost of Motion Estimation is mostly on the encode side: the encoder has to work a lot harder, searching more exhaustively within and ahead of frames, especially at lower bitrates where it only has so much data to work with and you really want to squeeze out every last drop.

Smaller videos demand more out of motion estimation and quality settings because there is less frame information to go around in general. 720p PS3 videos commonly look significantly worse than 1920x1200 PC recordings using identical settings, and blurring becomes much more noticeable. I am generally forced to lower the CRF of console videos by several points to give them more bitrate to work with. The same is true of games with high contrast or highly detailed textures. Think of CRF as a garden hose you're trying to suck balls through: the more defined the ball, the bigger and more flexible your hose needs to be (a lower CRF is a bigger hose), and the size of the ball isn't necessarily the deciding factor. 1920x1200 encodes of games with little visual movement or noise, like Civilization 5 or Divinity: Original Sin, can easily be handed 30 CRF, which is where the codec begins to break down and is generally considered very low quality. Even so, these videos are oftentimes far superior in quality to the best YouTube has to offer, and significantly smaller in file size. Just because you're "streaming" from YouTube doesn't mean you're saving bandwidth; in virtually all circumstances you're wasting it instead. The files are larger, streaming is a more computationally demanding process overall, and Flash is known to be demanding on hard disks.
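A commonly cited rule of thumb for x264 (from the FFmpeg H.264 encoding guide) is that raising CRF by 6 roughly halves the file size and lowering it by 6 roughly doubles it. The Python sketch below just applies that rule; actual sizes depend heavily on content, which is the whole point of the hose analogy, so treat the numbers as ballpark only.

```python
def relative_size(crf, reference_crf):
    """Rough file size at `crf` relative to an encode at `reference_crf`,
    using the x264 rule of thumb that +6 CRF about halves the bitrate."""
    return 2.0 ** ((reference_crf - crf) / 6.0)


if __name__ == "__main__":
    for crf in (20, 24, 26, 30):
        print(f"CRF {crf}: ~{relative_size(crf, 30):.2f}x the size of a CRF 30 encode")
```

By this estimate, going from 30 CRF to 20 CRF makes a file roughly three times larger (2^(10/6), about 3.2), which is why handing every video 20 CRF gets expensive fast.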

Avoid middlemen. Send megui your straight fraps files. Don't use something like the Vegas frameserver unless it's absolutely necessary; otherwise you add processing time, overhead, and potential risks for nothing. Keep it simple.

Standard Pipeline - Straight Encode

Summary: Record -> Megui -> Audio Handling -> Verify -> Release

Tools

- fraps

- megui

- avisynth batch scripter

- audition 1.5

- mkvmerge

- MPC

Recording

Fraps is very old software that has not seen a noteworthy update in years. It has two very significant flaws. First of all, its codec is shit, and problems like the color-crushing behavior of ConvertToYV12 mentioned earlier are allegedly related to the codec. The codec is needlessly hard disk intensive when recording and is notorious for its bad design. Secondly, the way fraps handles audio is nothing short of mind-bogglingly stupid. Despite this, fraps remains the only performance-conscious and reliable option for video recording, especially when you want to be sure your mic and in-game audio are synced.

The two audio inputs for fraps have no options. You "select" devices for them by choosing default devices within Windows itself. As if that isn't bad enough, both devices get recorded into the same audio stream. That means you must extensively test your volume levels before making a production recording, because direct recording from Windows audio tends to be far louder than you might expect, and it's extremely easy to make your voice inaudible even if your mic is very loud. This can't be fixed much in post, so make sure your settings are correct BEFORE recording! Never disconnect your mic or other USB devices while fraps is recording, and ALWAYS check your Windows defaults if you do, because disconnecting tends to reset the defaults and thus reset fraps. This is easier to screw up than you think! Vent and Steam, along with other applications involving mics or recording, tend to override your mic volume in Windows and screw everything up, so make certain both are manually set to match your Windows levels so they don't interfere at random.

Another note regarding audio: built-in audio chipsets sometimes have trouble keeping mics and Windows audio in sync. If you're experiencing this, don't use built-in audio. It has happened to me, and others have reported it to me. Otherwise, fraps should generally keep your audio synced. Avoid recording your audio externally unless you test it thoroughly; every single application I've tried has screwed it up one way or another (see: Viking: Battle for Asgard et al.).

Record to a hard drive that is as free of data as possible to minimize fragmentation. Hard disks are physically limited by their heads, which can only be in one place at once, so they can only read or write one spot at a time. More fragmentation makes the heads work harder and causes "pausing," which can easily create significant performance loss when recording. Never record demanding video to the drive Windows is on, or to a drive that an active game or other IO-heavy application is running from. Additionally, the slower the hard drive or its connection (such as a USB external enclosure), the less bandwidth you'll have for fraps. Fraps is first and foremost a hard disk intensive application, and it remains the only viable software for quality recording on anything short of a supercomputer, in which case you should try OBS (Open Broadcaster Software), which is not covered in this article.
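To get a feel for why the drive matters so much, here is the arithmetic for uncompressed video in Python. Fraps' own codec compresses frames, so its real data rate is lower than this; treat the figure as an upper bound and compare it against your drive's measured sustained write speed rather than its advertised peak.

```python
def raw_rate_mb_per_s(width, height, fps, bytes_per_pixel=3):
    """Uncompressed video data rate in megabytes (10**6 bytes) per second.
    3 bytes per pixel is 24-bit RGB; YV12 (4:2:0) averages 1.5."""
    return width * height * bytes_per_pixel * fps / 1e6


if __name__ == "__main__":
    for w, h, fps in ((1280, 720, 30), (1920, 1200, 30), (1920, 1200, 60)):
        rate = raw_rate_mb_per_s(w, h, fps)
        print(f"{w}x{h} @ {fps}fps: {rate:.1f} MB/s uncompressed")
```

At 1920x1200, 30fps and 24-bit RGB that's about 207 MB/s before compression, so it's easy to see how a fragmented or shared drive falls behind.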

OBS encodes in real time, which is nice because it saves hard disk space, but bad because encoding is an extremely performance-intensive process, and anything high-res at decent settings requires enormous CPU power to hold a steady FPS. OBS, however, avoids all of fraps' audio issues by letting you configure devices and save them to their own audio channels! So simple, yet no one else has done it yet!

Encoding

Megui is a front-end that feeds Avisynth scripts to encoders like x264. Avisynth is, in essence, a scripting engine that opens and processes your video and hands the resulting frames to the encoder. An AVS file itself is just a renamed .txt file. This may sound scary, and very time consuming, at first, but it's actually rather simple to do even basic editing with, and once you get the hang of it you can set up an encode in about 10 seconds. That said, the main caveat of this system is that, while powerful, it isn't really feasible or recommended for anything requiring major edits, like adding transitions or text. For that, refer to Pipeline B.

I'll break encoding down into three parts. The script, the batch scripter, and megui.

AVS Script

In a nutshell I keep megui open at all times and have a "master script" I paste crap into. This saves me button presses. The master script looks like this.

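```
# Open the Fraps recording
AVISource("V:\DIVINITY2.avi")
# Convert to YV12 using the Rec.709 matrix so colors match Fraps
ConvertToYV12(matrix="rec709")
# Match the source frame rate (30 here)
ChangeFPS(30)
```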

The lines and their meanings should be quite straightforward.

AVISource tells Avisynth which file to open. We can also use DirectShowSource for some other file types, but that's beyond the scope of this article. To append a file, we'd do this:

```
AVISource("V:\DIVINITY2.avi")++AVISource("V:\DIVINITY2b.avi")
```

A single + (UnalignedSplice) also joins clips, but ++ (AlignedSplice) cuts off any extra audio from the first clip, or pads it with silence if it's short, so the next clip's audio starts in sync with its video. While this doesn't normally happen, we don't like taking risks: any excess audio data would desync the audio in the following chunk!
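The sync problem can be modelled with a bit of bookkeeping. The Python sketch below uses hypothetical clip lengths and a simplified model of the two splice operators: it tracks where each clip's audio starts relative to its video when audio is simply concatenated (as with +) versus trimmed or padded to each clip's video length (as with ++).

```python
def audio_offsets(segments, aligned):
    """For each (video_seconds, audio_seconds) segment, return how far its
    audio start drifts from its video start, in seconds (positive = late)."""
    offsets = []
    video_pos = audio_pos = 0.0
    for video_len, audio_len in segments:
        offsets.append(audio_pos - video_pos)
        video_pos += video_len
        # Aligned splicing trims or pads each clip's audio to its video length;
        # unaligned splicing just appends the audio as-is.
        audio_pos += video_len if aligned else audio_len
    return offsets


if __name__ == "__main__":
    # Hypothetical: the first 10-minute chunk has half a second of extra audio.
    clips = [(600.0, 600.5), (600.0, 600.0), (600.0, 600.0)]
    print("+ :", audio_offsets(clips, aligned=False))
    print("++:", audio_offsets(clips, aligned=True))
```

If the first clip's audio runs half a second long, every clip after it starts half a second out of sync with a plain +, while ++ keeps every offset at zero.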

ConvertToYV12 is a command I don't entirely understand, but it effectively defines the colorspace for the video: it converts it to YV12, a planar YUV format with 4:2:0 chroma subsampling. The matrix parameter ensures the resulting file is 1:1 with fraps in color. If we leave it out, Avisynth falls back to its Rec.601 default and we'll usually experience color crushing, especially in greens. While not necessarily noticeable for most viewers, frame-to-frame comparisons show the difference is quite significant.

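The value in ChangeFPS(30) should be whatever your source is set to. It makes sure any excess frames that don't need to be there get culled. We can also downsample a 60fps video to 30fps this way. The "invisible sprites" I encountered in Yoshi's Island came from recording at 30fps while sprites flickered at 60fps; recording at 60 should avoid that, but keep in mind that ChangeFPS drops whole frames rather than blending them, so a sprite drawn only on alternate frames can still vanish after downsampling.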
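ChangeFPS changes the frame rate by dropping or duplicating frames, never blending them. The Python sketch below uses a simplified model of that selection (integer frame rates; each output frame takes the source frame at or just before its timestamp; Avisynth's exact rounding may differ) to show what happens to a sprite drawn only on every other frame of a 60fps source. The `blend_pairs` helper is hypothetical, not an Avisynth function, and is only there for comparison.

```python
def change_fps_indices(num_src_frames, src_fps, dst_fps):
    """Simplified ChangeFPS-style frame selection with integer frame rates:
    output frame n shows the source frame at (or just before) the same time."""
    num_out = num_src_frames * dst_fps // src_fps
    return [n * src_fps // dst_fps for n in range(num_out)]


def blend_pairs(frames):
    """Hypothetical 2:1 blend for comparison: average each pair of frames."""
    return [(a + b) / 2 for a, b in zip(frames[0::2], frames[1::2])]


if __name__ == "__main__":
    # Sprite opacity per frame in a 60fps source: drawn only on odd frames.
    sprite = [1.0 if i % 2 else 0.0 for i in range(120)]

    kept = change_fps_indices(len(sprite), 60, 30)
    after_drop = [sprite[i] for i in kept]
    print("frames kept:", kept[:5], "...")
    print("sprite visible after dropping:", any(after_drop))
    print("sprite opacity after blending:", blend_pairs(sprite)[:3])
```

Dropping keeps only the even frames, so the sprite disappears entirely; blending keeps it at half opacity instead.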

Batch Scripter

Avisynth Batch Scripter is the only thing I could find that did what I wanted: generate a batch of script lines from a directory of recordings for me to copy and paste. It's an extremely lightweight program that does only what it needs to do. How unusual! You can basically copy my settings and call it a day. The resulting AVS files can be cut and pasted into the master file virtually instantly. Just omit the ++ on the first file. Easy.
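If you'd rather script this step yourself, here is a rough Python stand-in for what the batch scripter produces. It is not the Avisynth Batch Scripter's actual behavior; the function names are hypothetical, and it assumes your recordings' file names sort in recording order. It joins every clip with ++ and appends the same two lines as the master script.

```python
from pathlib import Path


def avi_files_in(directory):
    """List .avi files in a directory, sorted by name (assumes names sort in
    recording order; the pattern is case-sensitive on some systems)."""
    return sorted(str(p) for p in Path(directory).glob("*.avi"))


def build_master_script(avi_paths, matrix="rec709", fps=30):
    """Return a master AVS script that appends every clip with ++ and then
    applies the same color conversion and frame rate lines."""
    if not avi_paths:
        raise ValueError("need at least one clip")
    sources = "++".join(f'AVISource("{path}")' for path in avi_paths)
    lines = [sources, f'ConvertToYV12(matrix="{matrix}")', f"ChangeFPS({fps})"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_master_script([r"V:\DIVINITY2.avi", r"V:\DIVINITY2b.avi"]))
```

Calling `build_master_script(avi_files_in("V:/"))` would produce a master script for every AVI in the root of V:, with no ++ in front of the first clip.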

Megui

There are far better sources for learning megui and the encoder's functions on your average search engine, so I'm going to keep this very brief.

The input file will be your AVS. Once it's loaded, you'll get an error if you're going to get one. Errors can come from a lot of things, like your AVISource entries differing at all in resolution or format, the files being hosed, or being too large. As for how big is "too large", I don't really know; it seems to depend heavily on the input. There are definitely memory limits you can hit with very large or very long files. For example, the Vegas frameserver can't handle anything over 3 hours; it easily starts throwing vague errors when you try to encode with megui.

Since I do post-processing on most of the audio, I usually encode it to max CBR AAC, but FLAC is also a good option. For some reason you can't dump it as WAV. When I'm not post-processing the audio, I set it to very high quality VBR AAC. I read up on AC-3 vs AAC but could find no solid reason to use AC-3 over AAC; apparently the only major differences crop up at very low bitrates. Never use MP3, however. MP3 is garbage. I've had Ogg audio drift out of sync on multiple occasions, which is why I dropped it from my pipeline.

Once the file is in, hit Preview and turn on Preview DAR. This ensures the final video has the correct display aspect ratio (though you can override it in mkvmerge, iirc). Why? I don't know. But many of my earlier videos were being automatically assigned incorrect aspect ratios. You can manually define the AR in the AVS script or just flick this switch every time you encode. Damn .net brogrammers. Don't close this window before you hit Autoencode, or it will lose the setting. Just minimize it and bring it back up when you do another encode.
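Display aspect ratio (DAR) is the frame's width:height ratio multiplied by the pixel (sample) aspect ratio (SAR). The Python sketch below, using hypothetical helper names, reduces a frame size to its ratio and works out what pixel shape a given DAR flag implies, which is a quick way to check whether a video was tagged correctly.

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce a frame size to its simplest width:height ratio."""
    d = gcd(width, height)
    return width // d, height // d


def sample_aspect_ratio(width, height, dar_w, dar_h):
    """Pixel aspect ratio needed to display width x height at dar_w:dar_h.
    SAR = DAR / (width / height); (1, 1) means square pixels."""
    num, den = dar_w * height, dar_h * width
    d = gcd(num, den)
    return num // d, den // d


if __name__ == "__main__":
    print("1920x1200 frame ratio:", aspect_ratio(1920, 1200))
    print("SAR if flagged 16:10:", sample_aspect_ratio(1920, 1200, 16, 10))
    print("SAR if flagged 16:9: ", sample_aspect_ratio(1920, 1200, 16, 9))
```

A 1920x1200 recording is 8:5 (16:10) with square pixels; if it were flagged as 16:9 instead, players would treat each pixel as 10:9 wide and stretch the image horizontally.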

Don't use One Click. Use Autoencode. Then switch tabs and hit start.

Here are my other settings for megui. x265, at the time of this writing, produced inferior results to x264, and especially to x264 10bit. I don't fully understand the FFMS Thread Count setting, but around when I messed with it I started getting secondary core timing BSODs, and every single one occurred during encoding. This is just conjecture, but be careful with that setting.

Audio Editing & Verification

In essence, I run Audition 1.5's stock compressor with a custom filter on the audio of most LPs. This gently reshapes most sounds but is focused mostly on my voice's frequencies. Ideally I'd only run it on my voice to avoid malforming the whole audio stream, but since fraps doesn't give me a separate audio channel for each device, I make do with a middle ground. After that, I replace the audio track inside the encoded video with mkvmerge.
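For readers unfamiliar with compressors, the core idea is a gain curve: anything louder than a threshold gets turned down by a ratio. The Python sketch below shows only that static curve, with hypothetical threshold and ratio values; it does not reproduce Audition 1.5's compressor, its presets, or its attack/release behavior.

```python
def compressed_level_db(level_db, threshold_db=-20.0, ratio=4.0):
    """Static curve of a basic downward compressor: below the threshold the
    level passes unchanged; above it, every `ratio` dB in becomes 1 dB out.
    No attack/release smoothing and no makeup gain."""
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1")
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio


if __name__ == "__main__":
    for level in (-30.0, -20.0, -10.0, 0.0):
        print(f"{level:6.1f} dB in -> {compressed_level_db(level):6.1f} dB out")
```

With a -20 dB threshold and a 4:1 ratio, a -10 dB peak comes out at -17.5 dB while anything quieter than -20 dB passes untouched; makeup gain then brings the whole track back up, which narrows the gap between a quiet voice and loud game audio.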

Simply Add a video, uncheck the existing AAC audio stream, and then Add your new one. Append files to attach them to existing ones. Mistakes at this step are what cause BSODs on DXVA or blow up hipster software like Chrome at the slightest provocation: if anything is misconfigured or throws an error here, it can easily twist peter inside-out. You can also set up multi-channel audio and other features here if you'd like. MKVMerge just re-muxes the container; it doesn't re-encode any of the streams.
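The same audio swap can also be done from the command line. The Python sketch below builds an mkvmerge call that copies the encoded file without its audio (`--no-audio`) and adds the new audio file; the file names are hypothetical. mkvmerge documents exit code 0 as success, 1 as success with warnings and 2 as an error, so only 2 is treated as a failure here.

```python
import subprocess


def remux_command(video_mkv, new_audio, output_mkv):
    """Build an mkvmerge argument list that keeps the video (and any other
    non-audio tracks) from video_mkv, drops its audio, and adds new_audio."""
    return [
        "mkvmerge",
        "-o", output_mkv,
        "--no-audio", video_mkv,  # file options apply to the file that follows
        new_audio,
    ]


def remux(video_mkv, new_audio, output_mkv):
    """Run mkvmerge; exit code 1 means warnings, 2 means an error."""
    cmd = remux_command(video_mkv, new_audio, output_mkv)
    code = subprocess.run(cmd).returncode
    if code >= 2:
        raise RuntimeError(f"mkvmerge failed with exit code {code}")
    return code


if __name__ == "__main__":
    # Hypothetical file names; prints the command instead of running it.
    print(" ".join(remux_command("episode01.mkv", "episode01_voice.m4a",
                                 "episode01_final.mkv")))
```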

Once I have a "final" encode, I watch it through in its entirety to catch issues like the gray half-frame corruption a segment of Conan experienced, BSODs, weird timestamp issues like those that affected The Ball, and other oddities. I verify using MPC with madVR.

Pipeline B - Edited Encode

Summary: Record -> Vegas -> Frameserver to Megui -> Verify -> Release

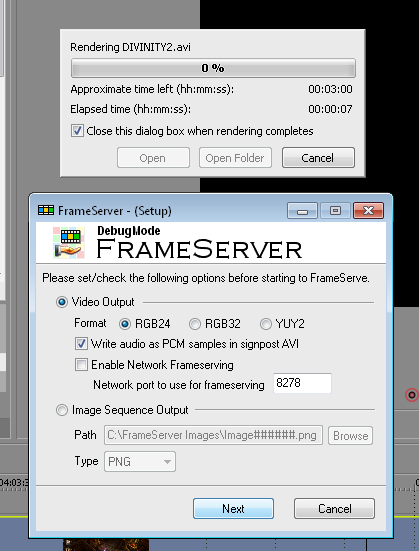

The only additional tools you need for this are Vegas and the Debugmode Frame Server plugin for Vegas.

When creating something like an XXE or my heavily edited Divinity LP, I use Sony Vegas for the actual editing. However, Vegas cannot encode for shit. It consistently produces garbage and runs very slowly. We can fix the first problem by using a frame server. The search engine of your choice can provide plenty of information about installing Debugmode Frame Server, so I'll focus on Vegas and the settings you need to change to get around the various obstacles Sony puts in the way of producing a quality video.

By default, Vegas assumes you are encoding a stream from a DVD recorded in the early 2000s, and it has a lot of damaging and unnecessary filters on, like de-interlacing. Make sure your project is set to Progressive and your other settings reflect a video source that doesn't want extra processing. I have not thoroughly tested the bit depth option here, as its only other setting is 32-bit and everything in the preview goes crazy with it on. According to my tests so far, this value seems to change nothing in the actual video (no artifacting or color changes).

You'll also want to disable the "unload files when out of focus" option, or whatever it's called, in the main settings. It commonly breaks encodes and was responsible for my weeks-long marathon trying to encode Salvation, as well as much grief with XXEs: Vegas considers the main window "out of focus" while the render window is active, and commonly unloads files it actually needs to render, either crashing or producing a hosed file. Sony.

I discovered the hard way, soon after I made Salvation, that Vegas defaults a "resampling" option to ON whose only purpose is to create ghosting in your video. This rubbish should always be off. I believe there is a setting somewhere to disable it permanently, but a surefire way to disable it for a project that's ready to encode is to box-select your videos ONLY and then disable it in the right-click menu. Never forget to do this unless you like YouTube-quality ghosting and mangling.

The frameserver itself, once accessed via the standard Render As option, will begin producing a Signpost file. This will be what you place as your AVISource Input in the AVS for megui. After having done so, wait for the frame server to finish making the signpost file, which may take a few minutes if it's billy big. Then you can encode, including using Preview DAR, as normal. I do the audio compression, although not as well as I'd like, inside vegas, so I set megui to VBR AAC for these. Since megui will only encode as fast as Vegas hands it frames, expect the encode to be much slower than a straight megui encode.

ARTICLE TO-DO

- ffmpeg and fixing busted crap

- links to programs if I feel it's necessary